
This post summarizes the following course.

Custom Models, Layers, and Loss Functions with TensorFlow

www.coursera.org/specializations/tensorflow-advanced-techniques

Review of the previous post

[Tensorflow 2][Keras] Week 2 - Activating Custom Layers

mypark.tistory.com

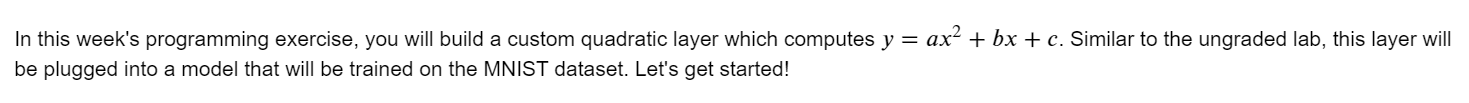

Week 3 Assignment: Implement a Quadratic Layer

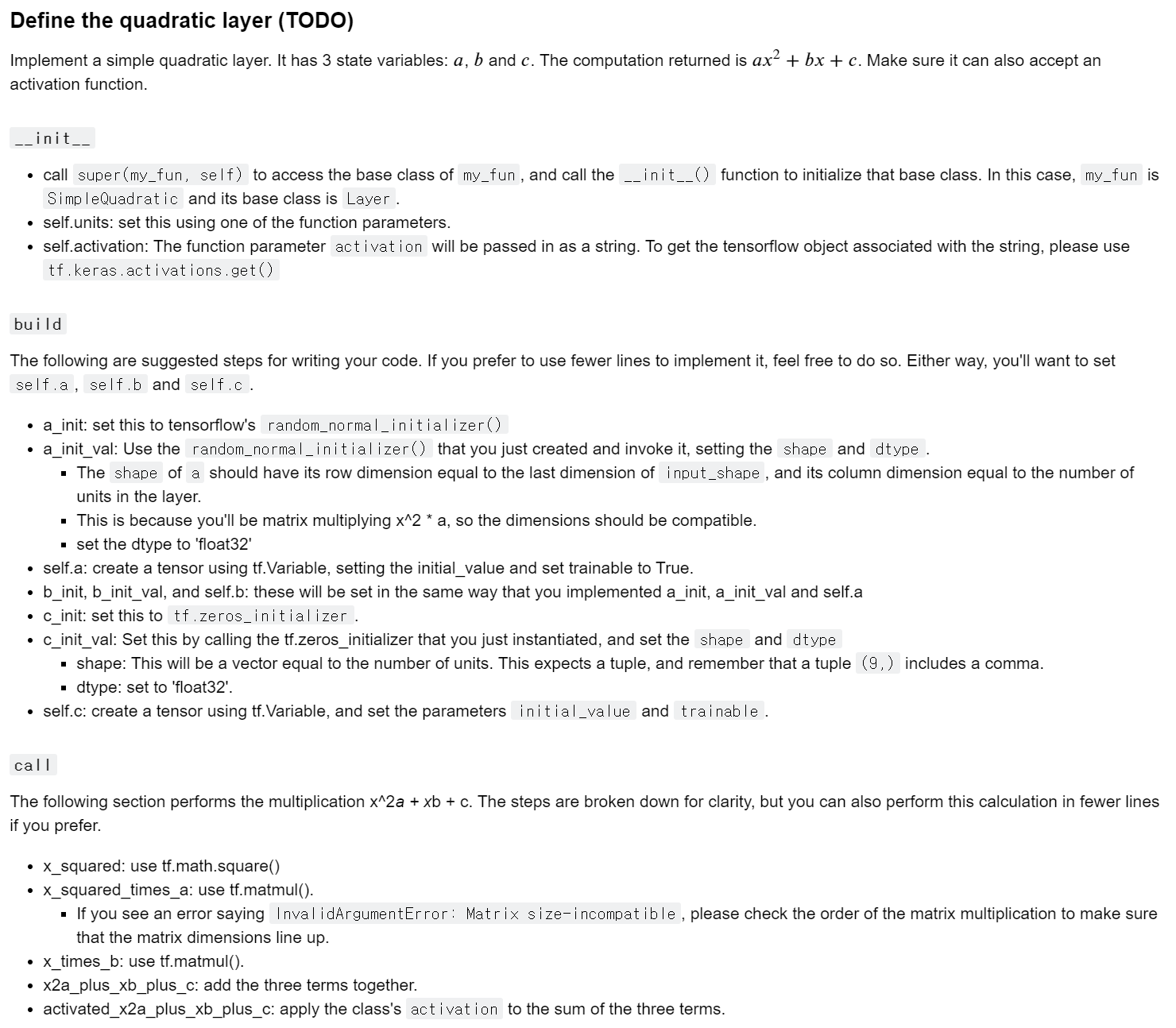
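Reading the `call` method in the code below, the layer appears to compute the following for an input row vector. The symbols are notation assumed here for illustration: $x \in \mathbb{R}^{n}$ is the input, $a, b \in \mathbb{R}^{n \times \text{units}}$ and $c \in \mathbb{R}^{\text{units}}$ are the trainable weights, $x^{\circ 2}$ is the element-wise square, and $\varphi$ is the optional activation.

$$
y = \varphi\left(x^{\circ 2}\, a + x\, b + c\right)
$$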

# Imports assumed to come from an earlier notebook cell; added here so the cell runs on its own.
import tensorflow as tf
from tensorflow.keras.layers import Layer

# Please uncomment all lines in this cell and replace those marked with `# YOUR CODE HERE`.
# You can select all lines in this code cell with Ctrl+A (Windows/Linux) or Cmd+A (Mac), then press Ctrl+/ (Windows/Linux) or Cmd+/ (Mac) to uncomment.

class SimpleQuadratic(Layer):

    def __init__(self, units=32, activation=None):
        '''Initializes the class and sets up the internal variables'''
        super(SimpleQuadratic, self).__init__()
        self.units = units
        # Resolve a string such as 'relu' (or None) into a callable activation.
        self.activation = tf.keras.activations.get(activation)

    def build(self, input_shape):
        '''Create the state of the layer (weights)'''
        # a and b should be initialized with random normal, c (or the bias) with zeros.
        # remember to set these as trainable.
        a_init = tf.random_normal_initializer()
        self.a = tf.Variable(name="kernel",
                             initial_value=a_init(shape=(input_shape[-1], self.units),
                                                  dtype='float32'),
                             trainable=True)

        b_init = tf.random_normal_initializer()
        self.b = tf.Variable(name="kernel",
                             initial_value=b_init(shape=(input_shape[-1], self.units),
                                                  dtype='float32'),
                             trainable=True)

        c_init = tf.zeros_initializer()
        self.c = tf.Variable(name="bias",
                             initial_value=c_init(shape=(self.units,),
                                                  dtype='float32'),
                             trainable=True)

    def call(self, inputs):
        '''Defines the computation from inputs to outputs'''
        # Element-wise square of the inputs, then x^2 * a + x * b + c.
        x_squared = tf.math.square(inputs)
        x_squared_times_a = tf.matmul(x_squared, self.a)
        x_times_b = tf.matmul(inputs, self.b)
        x2a_plus_xb_plus_c = x_squared_times_a + x_times_b + self.c
        activated_x2a_plus_xb_plus_c = self.activation(x2a_plus_xb_plus_c)
        return activated_x2a_plus_xb_plus_c

# THIS CODE SHOULD RUN WITHOUT MODIFICATION

# AND SHOULD RETURN TRAINING/TESTING ACCURACY at 97%+

mnist = tf.keras.datasets.mnist

(x_train, y_train), (x_test, y_test) = mnist.load_data()
x_train, x_test = x_train / 255.0, x_test / 255.0

model = tf.keras.models.Sequential([
    tf.keras.layers.Flatten(input_shape=(28, 28)),
    SimpleQuadratic(128, activation='relu'),
    tf.keras.layers.Dropout(0.2),
    tf.keras.layers.Dense(10, activation='softmax')
])

model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])

model.fit(x_train, y_train, epochs=5)
model.evaluate(x_test, y_test)
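To check the arithmetic of `call` by hand, here is a minimal pure-Python sketch of the same forward pass for a single input vector. The helper names `quadratic_forward` and `relu` and the tiny example weights are hypothetical, made up for illustration. They are not part of the assignment or of TensorFlow.

```python
# Pure-Python reference for the SimpleQuadratic forward pass on one sample.
# quadratic_forward and relu are hypothetical helpers for illustration only.

def quadratic_forward(x, a, b, c, activation=None):
    """Return activation(x^2 @ a + x @ b + c) for a single input vector.

    x: list of n floats
    a, b: n x units nested lists (same layout as the layer's weights)
    c: list of units floats
    """
    units = len(c)
    out = []
    for j in range(units):
        total = c[j]
        for i, x_i in enumerate(x):
            # Quadratic term plus linear term for input i and unit j.
            total += (x_i ** 2) * a[i][j] + x_i * b[i][j]
        out.append(total)
    if activation is not None:
        out = [activation(v) for v in out]
    return out


def relu(v):
    return max(0.0, v)


# Made-up weights: 2 inputs, 2 units.
x = [1.0, 2.0]
a = [[1.0, 0.0], [0.5, -1.0]]
b = [[0.0, 1.0], [1.0, 0.0]]
c = [0.5, -1.0]

result = quadratic_forward(x, a, b, c, activation=relu)
print(result)  # [5.5, 0.0]
```

For unit 0 the sum is 0.5 + (1·1 + 1·0) + (4·0.5 + 2·1) = 5.5. For unit 1 it is -1 + (1·0 + 1·1) + (4·(-1) + 2·0) = -4, which ReLU clips to 0.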
